What AI taught me about learning

In my last post, I talked about how Jason Wei’s article influenced my career planning. I strongly recommend reading that one first if you haven’t yet.

This time, I want to share how his ideas changed how I think about learning. These reflections come from two of his essays plus a few of my own experiments.

“Asymmetry of Verification and Verifier’s Rule”

“Life Lessons from Reinforcement Learning”

1. Run reinforcement learning on yourself

In reinforcement learning, there’s a key idea: stay on-policy. That means learning from the feedback your own actions generate instead of copying someone else’s path.

AI can never surpass another AI just by imitation. Humans can’t either.

We use role models at the beginning of learning. They help us get started faster. But real growth happens when you experiment, fail, reflect, and iterate in real environments with real stakes.

That’s where you collect firsthand rewards and penalties. That’s how you sharpen your instincts, find your edge, and uncover what truly drives you.

It’s also why “build in public” or “learn in public” works so well nowadays. When you expose your work to feedback loops, you accelerate learning.

2. Build a verification system

Jason’s Verifier’s Rule says that how easily an AI can be trained on a task depends on how verifiable that task is.

The same applies to us. Learning effectiveness isn’t about how hard you work; it’s about how clearly you can verify progress.

Here are my three steps:

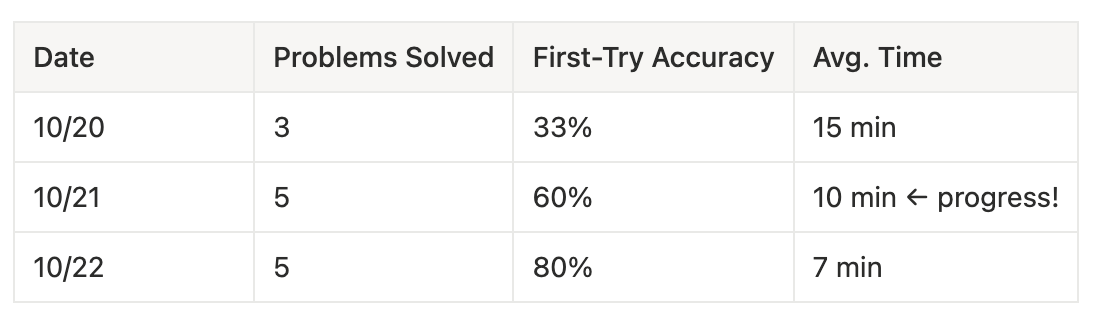

① Set verifiable micro goals

Instead of vague goals like “understand SQL JOINs”, I’ll say:

“Within 30 minutes, solve LeetCode #175, #181, and #182 independently.”

The more specific and trackable the goal, the easier it is for your brain to know whether you’re improving.

② Build instant feedback loops

Write query → Run test → Check result

├─ ✅ Pass: log as “first try correct”

├─ ❌ Fail: debug, revise, retest

└─ Optional: use EXPLAIN ANALYZE to check that execution time is under 100 ms (plain EXPLAIN shows only the query plan, not timings)
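The write → run → check loop above can be sketched in a few lines of Python. This is only a rough illustration, not the exact setup I use: it assumes an in-memory SQLite database, a made-up two-table schema, and a hypothetical helper called `check_query`. The 100 ms budget is measured with a wall-clock timer, which on toy data says little about real query performance.

```python
import sqlite3
import time


def check_query(conn, query, expected_rows, max_ms=100.0):
    """Run one attempt and return a small feedback record (hypothetical helper)."""
    start = time.perf_counter()
    rows = conn.execute(query).fetchall()
    elapsed_ms = (time.perf_counter() - start) * 1000
    # Compare as unordered sets of rows; key=repr keeps NULLs (None) sortable.
    passed = sorted(rows, key=repr) == sorted(expected_rows, key=repr)
    return {
        "passed": passed,
        "elapsed_ms": elapsed_ms,
        "within_budget": elapsed_ms < max_ms,
    }


# Toy data, invented for this example.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE person (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE address (person_id INTEGER, city TEXT);
    INSERT INTO person VALUES (1, 'Ada'), (2, 'Lin');
    INSERT INTO address VALUES (1, 'London');
""")
expected = [("Ada", "London"), ("Lin", None)]

# First attempt: an inner join silently drops people without an address.
first_try = check_query(
    conn,
    "SELECT p.name, a.city FROM person p "
    "JOIN address a ON a.person_id = p.id",
    expected,
)

# Revised attempt: a left join keeps every person.
second_try = check_query(
    conn,
    "SELECT p.name, a.city FROM person p "
    "LEFT JOIN address a ON a.person_id = p.id",
    expected,
)

print(first_try["passed"], second_try["passed"])
```

The point of the sketch is that each attempt gets a pass or fail signal within seconds, so the "debug, revise, retest" branch becomes a habit rather than a chore.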

③ Quantify progress

It’s basically a gamified learning loop.

💡 Maybe every learning system should feel like Duolingo, with immediate feedback, visible progress, and tight reinforcement cycles?
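To make the "quantify progress" step concrete, here is one possible way to turn a practice log into numbers. It is a sketch under my own assumptions: the log format (problem ID plus pass or fail, in chronological order), the function name `summarize`, and the two metrics (first-try rate and average tries until solved) are all illustrative choices, not a standard.

```python
from collections import defaultdict


def summarize(attempts):
    """Summarize a chronological list of (problem_id, passed) attempts."""
    tries_until_pass = {}      # problem_id -> attempts needed to first pass
    counts = defaultdict(int)  # problem_id -> attempts made so far
    for problem_id, passed in attempts:
        if problem_id in tries_until_pass:
            continue  # already solved; ignore later reruns
        counts[problem_id] += 1
        if passed:
            tries_until_pass[problem_id] = counts[problem_id]

    solved = len(tries_until_pass)
    first_try = sum(1 for n in tries_until_pass.values() if n == 1)
    return {
        "attempted": len(counts),
        "solved": solved,
        "first_try_rate": first_try / solved if solved else 0.0,
        "avg_tries": sum(tries_until_pass.values()) / solved if solved else 0.0,
    }


# Example log: one problem solved first try, one on the second try,
# one still unsolved.
log = [("175", True), ("181", False), ("181", True), ("182", False)]
stats = summarize(log)
print(stats)
```

Tracking the same two or three numbers week over week is what makes the loop feel like a game: the score either moves or it doesn't.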

3. Be ruthless about information quality

AI’s bottleneck is data quality. Ours is, too.

Low-quality, unverified information pollutes our judgment.

So I built a few filters:

Firsthand sources first. That’s why founder essays and builder threads are gold — they come from the field, not the echo chamber.

Cap your sources. For each topic, follow 3–5 high-signal thinkers. If they stop teaching you something new, unfollow.

Search deliberately. Don’t scroll passively. Don’t let algorithms feed you content; go find what you need.

The point isn’t to know more. It’s to learn better.

4. Use AI with restraint

AI boosts productivity, but it weakens your thinking if you overuse it.

Thinking requires friction, and writing is the best friction there is. I drafted these two articles by hand first. That “thinking while writing” quality — no AI can replace it.

But that doesn’t mean avoiding AI altogether.

The key is to treat it as a starting point, not an endpoint.

When you’re stuck, use AI for a cold start. No need to stare at a blank page.

But don’t stop at its output. AI writes like the internet averaged out — clear but mediocre.

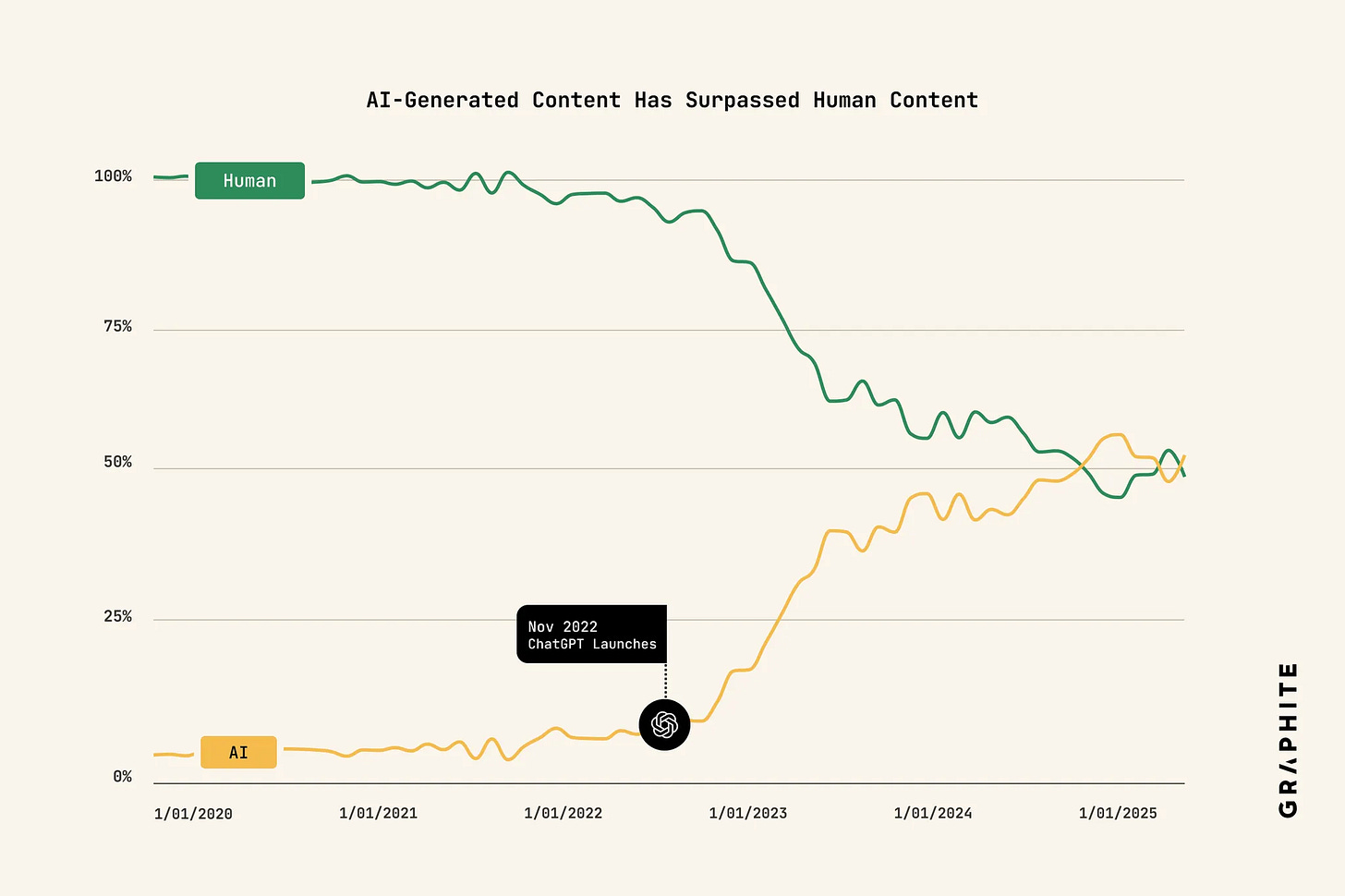

Now that AI-generated content floods the web, mediocrity is the real risk.

Our edge is what we do beyond the AI output — the thinking, judgment, and creative synthesis only humans can do.

That’s where real learning happens.